Live Semantic Search with DuckDB, BERT, and llama.cpp

Semantic vs Lexical Search

When searching a set of documents, there are, in general, two kinds of search: lexical search and semantic search. Lexical searches match vocabulary, or words: search for “frog” and you get documents that contain the word frog. Easy. Some lexical approaches will stem the word, so if you search for “running” you will also get documents that contain “run”.

I have used this sort of lexical search via SQLite’s FTS5 feature. This lets anyone create a virtual table, populate it with text, and then search it with the MATCH keyword. For example, in my search_transcripts package, I use FTS5 to create a table of transcript chunks and times, and the table can then be searched with SQL like:

```sql
select bm25(search_data) as score, * from search_data where text MATCH ? order by score;
```

where bm25 is the BM25 ranking function, a commonly used algorithm for lexical search.
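To try the FTS5 approach without installing anything, here is a minimal sketch using Python’s built-in sqlite3 module (most Python builds ship SQLite with FTS5 enabled, but that is an assumption about your build). The table name search_data mirrors the query above; the start_time column and the sample rows are made up for illustration. The porter tokenizer provides the kind of stemming described earlier.

```python
import sqlite3

con = sqlite3.connect(":memory:")

# Hypothetical transcript table: 'text' is searchable, 'start_time' is stored but not indexed.
# tokenize='porter' applies Porter stemming, so "running" and "run" share the stem "run".
con.execute(
    "CREATE VIRTUAL TABLE search_data "
    "USING fts5(text, start_time UNINDEXED, tokenize='porter')"
)
con.executemany(
    "INSERT INTO search_data (text, start_time) VALUES (?, ?)",
    [
        ("I went for a run this morning", "00:01:10"),
        ("The frog sat on a lily pad", "00:02:45"),
        ("Frogs and toads are amphibians, but a frog is not a toad", "00:05:30"),
    ],
)


def search(query):
    """Return (score, text, start_time) rows matching query, best match first."""
    # FTS5's bm25() returns more negative values for better matches,
    # so ascending order puts the most relevant rows first.
    return con.execute(
        "SELECT bm25(search_data) AS score, text, start_time "
        "FROM search_data WHERE search_data MATCH ? ORDER BY score",
        (query,),
    ).fetchall()


print(search("running"))  # matches the "run" row via stemming
print(search("animal"))   # no lexical match, even though frogs are animals
```

The empty result for “animal” is exactly the limitation that motivates semantic search.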

Semantic searches focus on matching meaning rather than words. Lexical searches focus on whether, and how often, a search phrase appears in a document. So if you search for “animal” and you have a document that says “I love cats”, a lexical search will not match it, since the word animal is missing. Here’s how the BERT Sentence Transformers documentation describes semantic search:

The idea behind semantic search is to embed all entries in your corpus, whether they be sentences, paragraphs, or documents, into a vector space. At search time, the query is embedded into the same vector space and the closest embeddings from your corpus are found. These entries should have a high semantic overlap with the query.

“Embed all entries into a vector space” is a fancy way of saying that we need a model to turn “I love cats”, as well as “animal”, into arrays of numbers. Then we can compute the cosine distance (or another vector distance) between these two arrays to figure out how close they are in vector space.
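To make the “compute cosine distances” step concrete, here is a minimal pure-Python sketch. The three-dimensional vectors are invented for illustration only; a real model produces hundreds of dimensions learned from training data. Cosine similarity is the dot product divided by the product of the vector lengths, and cosine distance is 1 minus that.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cosine_distance(a, b):
    """Cosine distance is simply 1 minus cosine similarity."""
    return 1.0 - cosine_similarity(a, b)


# Made-up 3-dimensional "embeddings" for illustration only.
i_love_cats = [0.9, 0.1, 0.3]
animal = [0.8, 0.2, 0.4]
spreadsheet = [0.1, 0.9, 0.0]

print(cosine_similarity(i_love_cats, animal))       # high: similar meaning
print(cosine_similarity(i_love_cats, spreadsheet))  # low: unrelated meaning
```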

BERT Sentence Transformers

BERT Sentence Transformers is a Python package, built on PyTorch, that can turn words and sentences into embedding vectors: “SentenceTransformers is a Python framework for state-of-the-art sentence, text and image embeddings.” It comes with a variety of pre-trained models, and the documentation has details on their training data. Because these models are trained on many real-world documents, they encode “cat” and “lion” in such a way that the two vectors are close to each other.

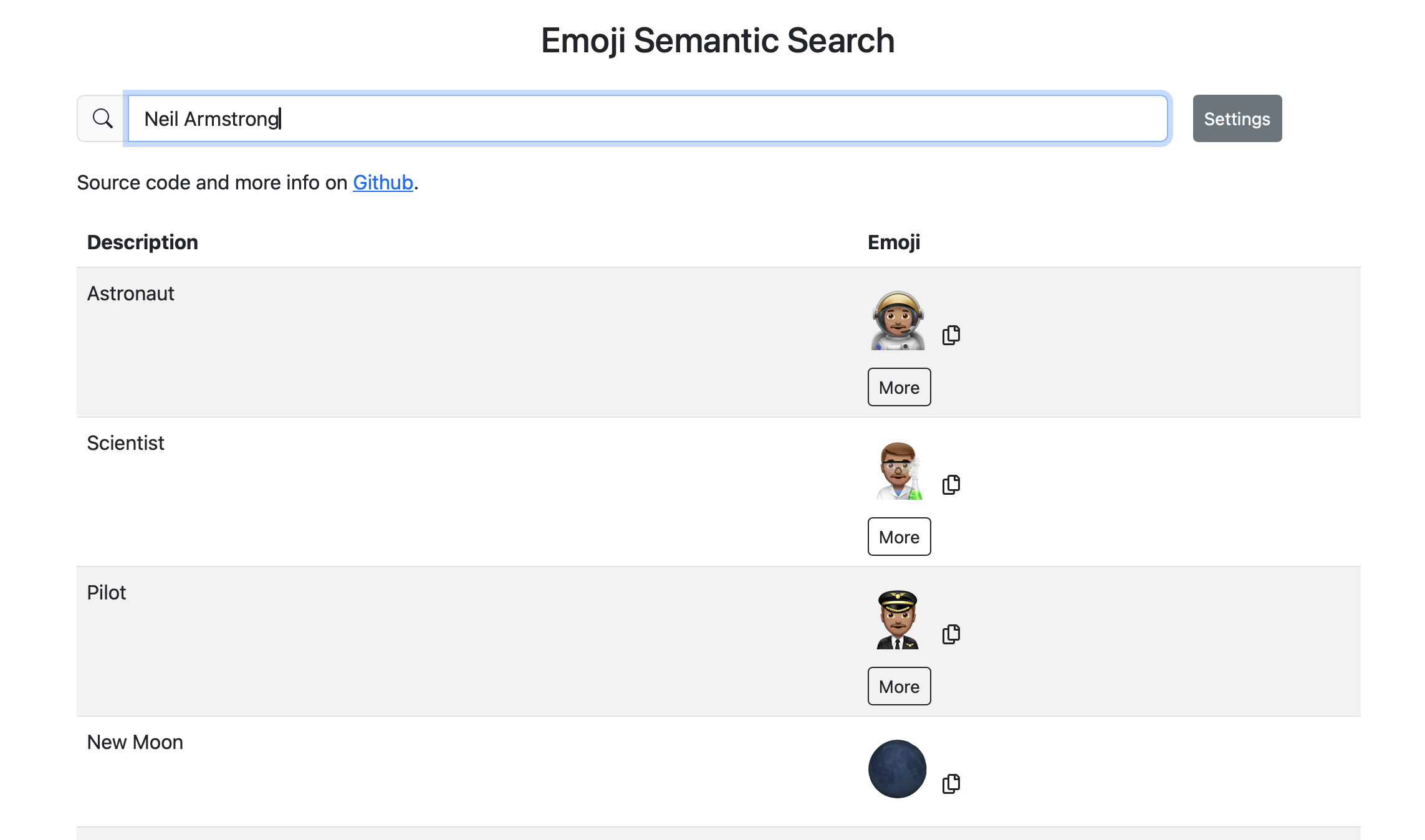

A Semantic Example: Emoji Search

My semantic Emoji search uses a sentence transformer model to encode the text description of each emoji (e.g., for 🎟️, I encode the string “admission tickets”) into an embedding. I do this for all 1000+ emojis ahead of time using the SentenceTransformers module.

However, to find the most semantically relevant emojis for “launch”, I need to turn “launch” into its own embedding, compute its cosine similarity with each of the 1000+ emoji embeddings, and pick the closest results. The cosine similarity computations are reasonably quick, but loading the SentenceTransformers module and its dependencies (including PyTorch) uses about 1GB of memory. Since all of my Python web apps run on a small DigitalOcean droplet, turning a search term into an embedding live was not practical using SentenceTransformers.
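The “compare against every emoji and keep the closest” step is a brute-force nearest-neighbour search. Here is a minimal sketch, assuming the emoji embeddings are already loaded into a dict; the toy vectors and the top_k helper are hypothetical, not the app’s actual code.

```python
import heapq
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k(query_vec, emoji_vecs, k=25):
    """Brute force: score every emoji against the query, keep the k best (highest first)."""
    scored = ((emoji, cosine_similarity(query_vec, vec)) for emoji, vec in emoji_vecs.items())
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


# Hypothetical precomputed embeddings of emoji descriptions (toy 3-d vectors).
emoji_vecs = {
    "🚀": [0.9, 0.1, 0.0],  # "rocket"
    "🎟️": [0.1, 0.9, 0.1],  # "admission tickets"
    "🐸": [0.0, 0.2, 0.9],  # "frog"
}
launch_vec = [1.0, 0.2, 0.0]  # stand-in for the embedding of "launch"

for emoji, score in top_k(launch_vec, emoji_vecs, k=2):
    print(emoji, round(score, 3))
```

For roughly a thousand vectors a linear scan like this is cheap; the expensive part is producing launch_vec in the first place. Normalizing every vector once up front would reduce each comparison to a plain dot product.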

So instead, I created embeddings for the 40,000 most common English words, based on the GloVe dataset. I precomputed all the distances between these words and each emoji’s text description, and pre-selected the top 25 emojis for each of the 40,000 words. Everything is precomputed, so the main engine of my emoji search is a simple SQLite query against a relatively small database. However, it only works for single-word searches.
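A lookup table like this can be sketched with Python’s built-in sqlite3 module. The schema (top_emojis with word, position, emoji) and the sample rows are hypothetical, chosen only to show why a query becomes a single indexed lookup and why multi-word phrases fall through.

```python
import sqlite3

con = sqlite3.connect(":memory:")

# Hypothetical schema: one row per (word, position) holding a precomputed best-match emoji.
con.execute(
    "CREATE TABLE top_emojis (word TEXT, position INTEGER, emoji TEXT, "
    "PRIMARY KEY (word, position))"
)
# Made-up sample rows; a full table would hold up to 25 emojis for each of ~40,000 words.
con.executemany(
    "INSERT INTO top_emojis VALUES (?, ?, ?)",
    [("launch", 1, "🚀"), ("launch", 2, "🎆"), ("launch", 3, "🎉"), ("frog", 1, "🐸")],
)


def lookup(word, limit=25):
    """Return precomputed emojis for a single word, best first ([] if the word is unknown)."""
    rows = con.execute(
        "SELECT emoji FROM top_emojis WHERE word = ? ORDER BY position LIMIT ?",
        (word.strip().lower(), limit),
    ).fetchall()
    return [emoji for (emoji,) in rows]


print(lookup("Launch"))        # precomputed hit
print(lookup("launch party"))  # multi-word phrase: not in the table, so []
```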

Embedding with llama.cpp: A low-memory breakthrough

The key to supporting live search with multi-word queries came when llama.cpp added support for BERT. As you can probably tell by the name, llama.cpp is designed to run large language models (“Inference of Meta’s LLaMA model (and others) in pure C/C++”), without Python or any other dependencies.

Now, by creating a llama.cpp-compatible version of a BERT model and running it with llama.cpp’s Python bindings, I can generate new embeddings for a search term on the fly without importing sentence-transformers. The llama-cpp-python library has only a few dependencies and doesn’t require PyTorch, so loading a model this way uses only about 100MB of memory.

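```python
import llama_cpp

# Placeholder path: a BERT model converted to llama.cpp's GGUF format
model_path = "path/to/bert-model.gguf"
text = "launch"  # the search term to embed

# embedding=True puts the model in embedding mode rather than text generation
model = llama_cpp.Llama(model_path=model_path,
                        embedding=True,
                        verbose=False)

# create_embedding returns an OpenAI-style dict; the vector is in data[0]['embedding']
arr = model.create_embedding(text)['data'][0]['embedding']
```

Easy array comparisons thanks to DuckDB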

At about the same time that llama.cpp added BERT support, DuckDB 0.10.0 was released with fixed-length array support and corresponding functions such as array_cosine_similarity. With a database of precomputed embedding vectors for all the emojis, I can now retrieve semantically similar results with something like:

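```python
import duckdb
import llama_cpp

model_path = "path/to/bert-model.gguf"  # placeholder: a GGUF-converted BERT model
text = "launch"                          # the user's search term

model = llama_cpp.Llama(model_path=model_path,
                        embedding=True,
                        verbose=False)

query_arr = model.create_embedding(text)['data'][0]['embedding']

# vectors.db holds array_table(id, arr), where arr is a fixed-length DOUBLE[384] array
con = duckdb.connect('vectors.db')

# Rank every stored vector by cosine similarity to the query and keep the top 25;
# .to_df() returns the result as a pandas DataFrame
con.sql("select id, array_cosine_similarity(arr, ?::DOUBLE[384]) as similarity "
        "from array_table order by similarity desc limit 25;",
        params=(query_arr,)).to_df()
```

Ready for 🚀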

Today, in the latest iteration of my semantic Emoji finder, I still use the precomputed database first. If nothing is found (either an uncommon word or a longer phrase), it creates a new embedding with llama.cpp, then uses a DuckDB database to find and return the most relevant emojis. The new code to retrieve the results with DuckDB is a mere 21 lines, and the code to create the DuckDB vector table is 11 lines.
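The lookup order described above can be sketched as one small function that takes its three steps as parameters. The names find_emojis, fake_lookup, fake_embed, and fake_nearest and the stub data are hypothetical, not taken from the app; in practice the embed step would wrap llama.cpp and the nearest step would run the DuckDB query.

```python
def find_emojis(term, lookup_precomputed, embed, nearest, limit=25):
    """Cheap precomputed lookup first; fall back to a live embedding search.

    lookup_precomputed(term) -> list of emojis, empty if the term isn't precomputed
    embed(term)              -> embedding vector (e.g. from llama.cpp)
    nearest(vector, limit)   -> list of emojis (e.g. from a DuckDB similarity query)
    """
    results = lookup_precomputed(term)
    if results:
        return results[:limit]
    # Uncommon word or multi-word phrase: embed it live and search the vector table
    return nearest(embed(term), limit)


# Hypothetical stand-ins for the real SQLite, llama.cpp, and DuckDB pieces.
PRECOMPUTED = {"launch": ["🚀", "🎆"]}
embedded_terms = []


def fake_lookup(term):
    return PRECOMPUTED.get(term.lower(), [])


def fake_embed(term):
    embedded_terms.append(term)  # record which terms needed a live embedding
    return [0.1, 0.2, 0.3]


def fake_nearest(vector, limit):
    return ["🛸", "🌌"][:limit]


print(find_emojis("launch", fake_lookup, fake_embed, fake_nearest))
print(find_emojis("blast off into space", fake_lookup, fake_embed, fake_nearest))
```

Passing the steps in as functions keeps the fast path free of any model loading: the embedding step only runs when the precomputed table has nothing to offer.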

The Dash app uses slightly more memory now, and live searches take 100-200 milliseconds rather than the ~50ms of the static SQLite lookup. However, being able to search for any term (occasionally even a famous name) adds functionality and whimsy, so I think it’s definitely worth it!